Defective Altruism

Socialism is the most effective altruism. Who needs anything else? The repugnant philosophy of “Effective Altruism” offers nothing to movements for global justice.

The first thing that should raise your suspicions about the “Effective Altruism movement” is the name. It is self-righteous in the most literal sense. Effective altruism as distinct from what? Well, all of the rest of us, presumably—the ineffective and un-altruistic, we who either do not care about other human beings or are practicing our compassion incorrectly.

We all tend to presume our own moral positions are the right ones, but the person who brands themselves an Effective Altruist goes so far as to adopt “being better than other people” as an identity. It is as if one were to label a movement the Better And Smarter People Movement—indeed, when the Effective Altruists were debating how to brand and sell themselves in the early days, the name “Super Hardcore Do-Gooders” was used as a placeholder. (Apparently in jest, but the name they eventually chose means essentially the same thing.)

Effective Altruism can be defined as a “social movement,” a “research field,” or a “practical community.” The Center For Effective Altruism says it’s about “find[ing] the best ways to help others, and put[ting] them into practice,” and is now “applied by tens of thousands of people in more than 70 countries.” It has received coverage in leading media outlets (e.g., the New Yorker, TIME, and the New York Times), and $46 billion has apparently been committed to Effective Altruist causes, although most of that appears to be pledges from the same few billionaires.

When I first heard about Effective Altruism, back around 2013, it was pitched to me like this: We need to take our moral obligations seriously. If there is suffering in the world, it’s our job to work to relieve it. But it’s also easy to think you’re doing good while you’re actually accomplishing very little. Many charities, I was told, don’t really rigorously assess whether they’re actually succeeding in helping people. They will tell you how much money they spent but they don’t carefully measure the outcomes. The effective altruist believes in two things: first, not ignoring your duty to help other people (the altruism part), and second, making sure that as you pursue that duty, you’re accomplishing something truly meaningful that genuinely helps people (the effectiveness part).

Put this way, it sounded rather compelling. Some Effective Altruists started an organization, GiveWell, that tried to figure out which charities were actually doing a good job at saving lives and which were mostly hype. I thought that made plenty of sense. But I quickly saw qualities in EA that I found deeply off-putting. They were rigorously devoted to trying to quantify moral decisions, to decide what the mathematically morally superior course of action to take was. And as GiveWell says, while first they try to shift people from doing things that “feel” good to doing things that achieve good results, they then encourage them to ask, “How can I do as much good as possible?” Princeton philosophy professor Peter Singer’s 2015 EA manifesto is called The Most Good You Can Do, and EA is strongly influenced by utilitarianism, so they are not just trying to do good, but maximize the good (measured in some kind of units of goodness) that one puts out into the world.

To that end, I heard an EA-sympathetic graduate student explaining to a law student that she shouldn’t be a public defender, because it would be morally more beneficial for her to work at a large corporate law firm and donate most of her salary to an anti-malaria charity. The argument he made was that if she didn’t become a public defender, someone else would fill the post, but if she didn’t take the position as a Wall Street lawyer, the person who did take it probably wouldn’t donate their income to charity, thus by taking the public defender job instead of the Wall Street job she was essentially murdering the people whose lives she could have saved by donating a Wall Street income to charity.1 I recall that the woman to whom this argument was made became angry and frustrated, and the man making the argument took her anger and frustration as a sign that she simply could not handle the harsh but indisputable logic bombs he was dropping on her. (I don’t know whether she ultimately became a public defender.)

As it turns out, this argument is a popular one among EA types. Singer makes it in The Most Good You Can Do, saying that those who aim to earn high salaries but donate to charity are “to a greater extent than most people, living in accord with their values.” An early talk by William MacAskill, an Oxford philosopher who is one of EA’s leading intellectuals (and the subject of a recent New Yorker profile), was entitled “Want an ethical career? Become a banker.” Here’s a Stanford student arguing that while getting a corporate job and tithing some of your income may “clash with a lot of people’s intuitions about how to do good and even sound a lot like ‘selling out,’” and many might “see their best ways of doing good as being something along the lines of research, lobbying or civic engagement, perhaps through directly working for an advocacy organization or charity,” in fact “good work is ‘replaceable’ when someone else would have done it anyway” and “a lot of nonprofit work is in fact replaceable.” (The student went to work at a Silicon Valley software company.)2 80,000 Hours, a leading EA nonprofit that gives career advice, also favorably profiles a man named Sam Bankman-Fried, who “inspired by these arguments, founded one of the leading cryptocurrency exchanges” (in other words, started a business helping people commit fraud) and is now worth $22.5 billion. He has become a political mega donor, contributing to Joe Manchin, Joe Biden, and the DNC, among others, and trying to get Effective Altruists elected to office, plus of course supporting pro-crypto policies. [UPDATE: Bankman-Fried has since reportedly lost 94% of his net worth in a single day after the implosion of his cryptocurrency trading business. His firm is now being investigated by the SEC and DOJ. This sort of spectacular sudden collapse is not uncommon in crypto, which is one reason readers are advised to stay the hell away from it. This may well prove a major blow to Effective Altruism’s future funding.]

GiveWell also makes the case that nonprofit work is an “easy way out” and that those who do more “learning and deliberation” will realize that “creating economic value” in the for-profit sector may be the morally superior course:

“The conventional wisdom that ‘doing good means working for a nonprofit,’ in our view, represents an ‘easy way out’—a narrowing of options before learning and deliberation begin to occur. We believe that many of the jobs that most help the world are in the for-profit sector, not just because of the possibility of ‘earning to give’ but because of the general flow-through effects of creating economic value.”

The EA community is rife with arguments in defense of things that conflict with our basic moral intuitions. This is because it is heavily influenced by utilitarianism, which always leads to endless numbers of horrifying conclusions until you temper it with non-utilitarian perspectives (for instance, you should feed a baby to sharks if doing so would sufficiently reduce the probability that a certain number of people will die of an illness).3 Patching up utilitarianism with a bunch of moral nonnegotiables is what everyone ends up having to do unless they want to sound like a maniac, as Peter Singer does with his appalling utilitarian takes on disability. (“It’s not true that I think that disabled infants ought to be killed. I think the parents ought to have that option.”) Neuroscientist Erik Hoel, in an essay that completely devastates the philosophical underpinnings of Effective Altruism, shows that because EA is built on a utilitarian foundation, its proponents face two unpalatable options: get rid of the attempt to pursue the Quantitatively Maximum Human Good, in which case it is reduced to a series of banal propositions not much more substantive than “we should help others,” or keep it and embrace the horrible repugnant conclusions of utilitarian philosophy that no sane person can accept. (The approach adopted by many EAs is to hold onto the repugnant conclusions, but avoid mentioning them too much.) Hoel actually likes most of what Effective Altruists do in practice (I don’t), but he says that “the utilitarian core of the movement is rotten,” which EA proponents don’t want to hear, because it means the problems with the movement “go beyond what’s fixable via constructive criticism.” In other words, if Hoel is right—and he is—the movement’s intellectual core is so poisoned by bad philosophy as to be unsalvageable.

It’s easy to find plenty of odious utilitarian “This Horrible Thing Is Actually Good And You Have To Do It” arguments in the EA literature. MacAskill’s Doing Good Better argues that “sweatshops are good for poor countries” (I’ve responded to this argument before here) and we should buy sweatshop goods instead of fair trade products. He also co-wrote an article on why killing Cecil the lion was a moral positive and argued against participating in the “ice bucket challenge” that raised millions for the ALS Association (not because it was performative, but because he did not consider the ALS Association the mathematically optimal cause to raise money for). Here’s a remarkable Effective Altruist lecture called “Invest in Evil to Do More Good?” arguing that people who think tobacco companies do harm should get jobs in them, and organizations devoted to preventing climate change should invest in fossil fuel companies:

“How should a foundation whose only mission is to prevent dangerous climate change invest their endowment? Surprisingly, in order to maximize expected utility, it might use ‘mission hedging’ investment principles and invest in fossil fuel stocks. This way it has more money to give to organisations that combat climate change when more fossil fuels are burned, fossil fuel stocks go up and climate change will get particularly bad. When fewer fossil fuels are burnt and fossil fuels stocks go down—the foundation will have less money, but it does not need the money as much. Under certain conditions the mission hedging investment strategy maximizes expected utility. … So generally, if you want to do more good, should you invest in ‘evil’ corporations with negative externalities? Corporations that cause harm such as those that sell arms, tobacco, factory farmed meat, fossil fuels, or advance potentially dangerous new technology? Here I argue that, though perhaps counterintuitively, that this might be the optimal investment strategy. … It might be a good strategy for donors or even other entities such as governmental organisations that are concerned with global health to invest in tobacco corporations and then give the profits to tobacco control lobbying efforts. Another example might be that animal welfare advocates should invest in companies engaged in factory farming such as those in [the] meat packing industry, and then use the profits to invest in organisations that work to create lab grown meat.”

MacAskill wrote a moral philosophy paper arguing that even if we “suppose that the typical petrochemical company harms others by adding to the overall production of CO2 and thereby speeding up anthropogenic climate change” (a thing we do not need to “suppose”), if working for one would be “more lucrative” than any other career, “thereby enabling [a person] to donate more” then “the fact that she would be working for a company that harms others through producing CO2” wouldn’t be “a reason against her pursuing that career” since it “only makes others worse off if more CO2 is produced as a result of her working in that job than as a result of her replacement working in that job.” (You can of course see here the basic outlines of an EA argument in favor of becoming a concentration camp guard, if doing so was lucrative and someone else would take the job if you didn’t. But MacAskill says that concentration camp guards are “reprehensible” while it is merely “morally controversial” to take jobs like working for the fossil fuel industry, the arms industry, or making money “speculating on wheat, thereby increasing price volatility and disrupting the livelihoods of the global poor.” It remains unclear how one draws the line between “reprehensibly” causing other people’s deaths and merely “controversially” causing them.)4

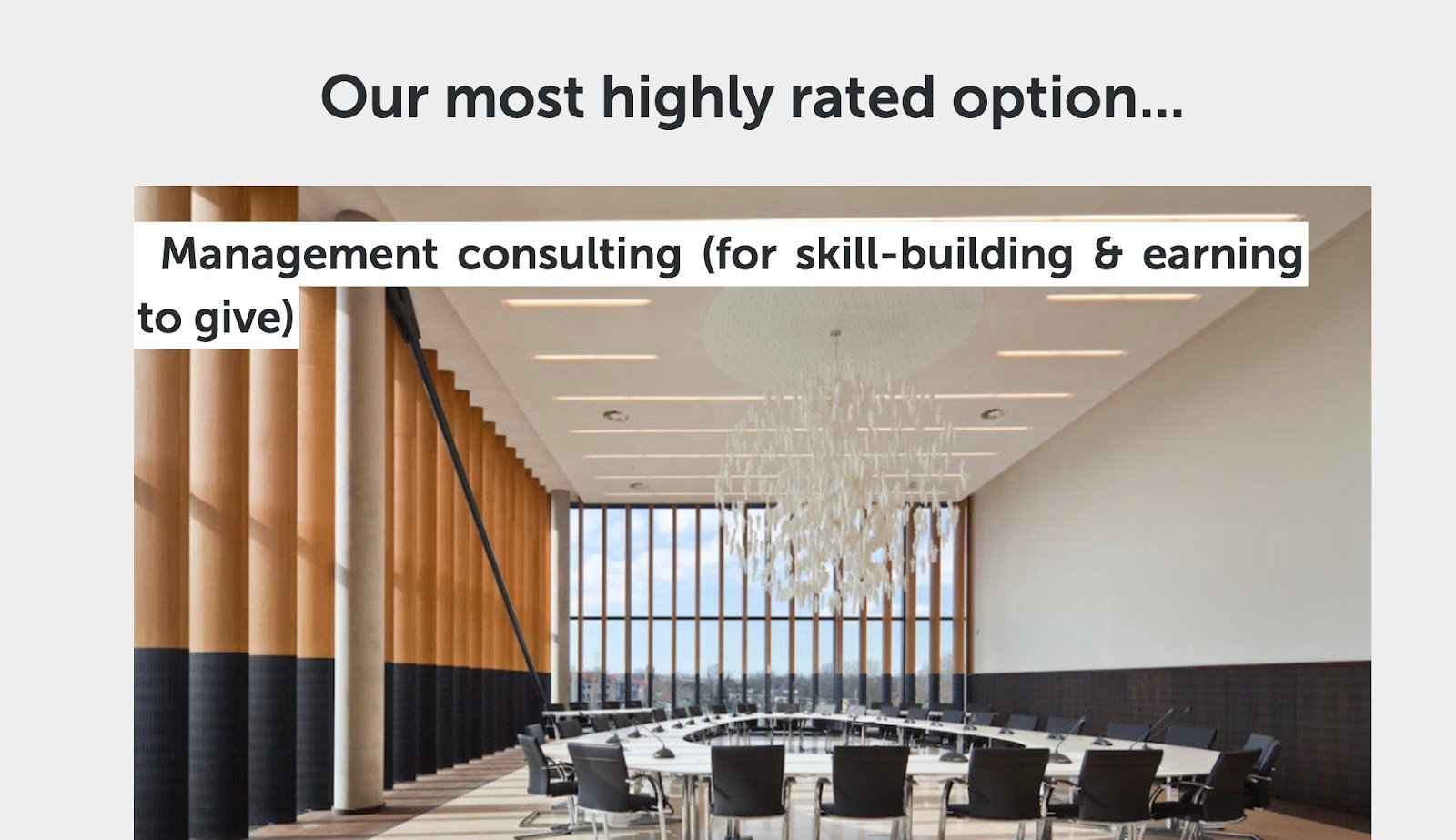

In fact, 80,000 Hours, the EA organization that advises people on how to do the most good with their careers, actually developed a career quiz to help would-be Super Hardcore Do-Gooders figure out how to find a job that gives something to the community. When I took the quiz, it informed me that my best option was to become a management consultant:

When I changed my answers to say that I sucked at math and science and was also bad at public speaking and writing, it said I should join the civil service.5

In recent years, EA’s exponents have apparently begun to de-emphasize this aspect of the program, perhaps because the rising tide of left-wing populism meant that it became pragmatically unwise to try to pitch change-oriented young people on the moral case for investment banking and Big Tobacco. The “optics” of the Actually, Bankers Who Do Philanthropy Are Morally Better Than Social Workers argument were terrible, and MacAskill (who is very worried about “damaging my brand, or the brand of effective altruism”)6 admitted that giving too much “prominence” to the argument “may have been a mistake.” (The career quiz has now been taken offline, but you can still access an archived version.)

Effective Altruism’s focus has long been on philanthropy, and its leading intellectuals don’t seem to understand or think much about building mass participation movements. The Most Good You Can Do and Doing Good Better, the two leading manifestos of the movement, focus heavily on how highly-educated Westerners with decent amounts of cash to spare might decide on particular career paths and allocate their charitable donations. Organizing efforts like Fight For 15 and Justice For Janitors do not get mentioned. Doing Good Better concludes with a what-to-do-now section on how to actually become an effective altruist. It contains four suggestions: (1) regularly give to charity, (2) “write down a plan for how you’re going to incorporate effective altruism into your life,” with the listed possibilities being giving to charity, “chang[ing] what you buy,” and changing careers (perhaps into management consulting!), (3) “Join the effective altruism community” by signing up for its mailing list, and (4) “Tell others about effective altruism” by evangelizing to “friends, family, and colleagues,” since “if you can get one to make the same changes you make, you’ve doubled your impact.” That’s it. That’s the advice.

I have to say, my own instinct is that all of this sounds pretty damned in-effective in terms of how much it is likely to solve large-scale social problems, and both MacAskill and Singer strike me as being at best incredibly naive about politics and social action, and at worst utterly unwilling to entertain possible solutions that would require radical changes to the economic and political status quo. (MacAskill and Singer are not even the worst, however. In 2019 I was shown an EA voting guide for the Democratic presidential primary which did a bunch of math and then concluded that Cory Booker was the optimal candidate to support, one of the reasons being that he was in favor of charter schools. Sadly, the guide has since been taken offline.) If EA had been serious about directing money toward the worthiest cause, it would have been much more interested from the start in the state’s power to redistribute wealth from the less to the more worthy. After all, if your view is that people who work on Wall Street should give some of their money away, relying on them to make individual moral decisions is very ineffective (especially since most of them are sociopaths). You know what’s better than going and working on Wall Street yourself? Getting a tax put in place, so that the state just reaches into their pocket and moves the money where it can do more good. Taking rich people’s money by force and spending it is far more effective than having individual do-gooders work for 30 years on Wall Street so they can do some philanthropy on the side (and relying on them to maintain their commitments). In his The Life You Can Save, Singer, who has defended high CEO salaries and is dismissive of those who call for “more revolutionary change,” adopts a highly individualistic approach in which the central moral question facing us is how we should spend our money. At the end of the book he offers a seven-point plan to “make you part of the solution to world poverty.” The steps are:

- Visit www.TheLifeYouCanSave.com and pledge to give a portion of your income to charity

- “Check out some of the links on the website, or do your own research, and decide to which organization or organizations you will give.”

- Work out how much of your income you will be giving and how often you will give it, then do it.

- “Tell others what you have done” using “talk, text, e-mail, blog, use whatever online connections you have.”

- Ask your employer to set up a scheme that automatically donates 1 percent of everyone’s income to charity unless they choose to opt out. (Your coworkers are going to love you for this one. Note that it comes out of workers’ incomes rather than corporate profits.)

- “Contact your national political representatives and tell them you want your country’s foreign aid to be directed only to the world’s poorest people.” This is, presumably, Singer’s definition of doing politics.

- “Now you’ve made a difference to some people living in extreme poverty.”

This is certainly the kind of poverty-reduction plan one would expect from someone focused on individual actions and not on movement politics. The likelihood that it will end poverty is approximately nil.

Brian Berkey, in a paper defending EA against the criticism that it doesn’t care enough about systemic change, suggests that this isn’t really a critique of Effective Altruism, because it’s being made using effective altruist premises. In other words, if you say “effective altruists don’t realize that systemic change rather than individual charity is the most effective way to help people,” theoretically Effective Altruists should be comfortable with this criticism, because it can be construed as an internal debate over what kinds of actions do quantitatively maximize the amount of good. In fact, you could technically be a socialist Effective Altruist, someone who has just concluded that being part of the long-term project to replace capitalist institutions is ultimately the best way to maximize human flourishing. I believe that conclusion myself, and I share in common with the EA people a desire to meaningfully make the world better.

But we might here distinguish between two things: on the one hand, caring about other people as a general matter and wanting to make sure your actions have good consequences, and on the other, the actually-existing Effective Altruism movement, composed of particular public intellectuals, organizations, programs, and discussion groups. Reading Singer and MacAskill’s manifestos, it’s pretty clear that they don’t know much about politics and aren’t very interested in it (although that EA crypto-billionaire has certainly gotten political, and Singer ran as an Australian Green Party candidate in 1996 and achieved 2.9 percent of the vote). Berkey notes that the “systemic change” critique is compatible with Effective Altruist premises, which is true, but it’s impossible to say that the movement as it actually exists is serious about the possible value of social change through social movements. Instead, its proponents tend to spend a lot of time debating how rich people should spend their money and what their think tanks should work on.

The actually-existing EA movement is concerned with some issues that are genuinely important. They tend to be big on animal rights, for instance. But I’m an animal rights supporter, too, and I’ve never felt inclined to be an Effective Altruist. What does their movement add that I don’t already believe? In many cases, what I see them adding is an offensive, bizarre, and often outright deranged set of further beliefs, and a set of moral priorities that I find radically out of touch and impossible to square with my basic instincts about what goodness is.

For instance, some EA people (including the 80,000 Hours organization) have adopted a deeply disturbing philosophy called “longtermism,” which argues that we should care far more about the very far future than about people who are alive today. In public discussions, “longtermism” is presented as something so obvious that hardly anyone could disagree with it. In an interview with Ezra Klein of the New York Times, MacAskill said it amounted to the idea that future people matter, and because there could be a lot of people in the future, we need to make sure we try to make their lives better. Those ideas are important, but they’re also not new. “Intergenerational justice” is a concept that has been discussed for decades. Of course we should think about the future: who do you think that socialists are trying to “build a better world” for?

So what does “longtermism” add? As Émile P. Torres has documented in Current Affairs and elsewhere, the biggest difference between “longtermism” and old-fashioned “caring about what happens in the future” is that longtermism is associated with truly strange ideas about human priorities that very few people could accept. Longtermists have argued that because we are (on a utilitarian theory of morality) supposed to maximize the amount of well-being in the universe, we should not just try to make life good for our descendants, but should try to produce as many descendants as possible. This means couples with children produce more moral value than childless couples, but MacAskill also says in his new book What We Owe The Future that “the practical upshot of this is a moral case for space settlement.” And not just space settlement. Nick Bostrom, another EA-aligned Oxford philosopher whose other bad ideas I have criticized before, says that truly maximizing the amount of well-being would involve the “colonization of the universe,” and using the resulting Lebensraum to run colossal numbers of digital simulations of human beings. You know, to produce the best of all possible worlds.

These barmy plans (a variation on which has been endorsed by Jeff Bezos) sound like Manifest Destiny: it is the job of humans to maximize ourselves quantitatively. If followed through, they would turn our species into a kind of cancer on the universe, a life-form relentlessly devoted to the goal of reproducing and expanding. It’s a horrible vision, made even worse when we account for the fact that MacAskill entertains what is called the “repugnant conclusion”—the idea that maximizing the number of human beings is more important than ensuring their lives are actually very good, so that it is better to have a colossal number of people living lives that are barely worth living than a small number of people who live in bliss.

Someone who embraced “longtermism” could well feel that it’s the duty of human beings to forget all of our contemporary problems except to the extent that they affect the chances that we can build a maximally good world in the very far distant future. Indeed, MacAskill and Hilary Greaves have written that “for the purposes of evaluating actions, we can in the first instance often simply ignore all the effects contained in the first 100 (or even 1,000) years, focussing primarily on the further-future effects. Short-run effects act as little more than tie-breakers.” To that end, longtermism cares a lot about whether humans might go extinct (since that would prevent the eventual creation of utopia), but not so much about immigration jails, the cost of housing, healthcare, etc. Torres has noted that the EA-derived longtermist ideas have a lot in common with other dangerous ideologies that sacrifice the interests of people in the here and now for a Bright, Shining Tomorrow.

Torres even quotes EA thinkers downplaying the catastrophe of climate change on the grounds that because it probably won’t render us outright extinct, it should be less of a priority. Indeed, the 80,000 Hours website is fairly “meh” on the question of whether a young Super Hardcore Do-Gooder should consider trying to stop climate change, and notes that “nothing in the IPCC’s report suggests that civilization will be destroyed.” Elsewhere, it tells us that climate change does not affect what should be our “first priority,” the avoidance of outright extinction, because “it looks unlikely that even 13 degrees of warming would directly cause the extinction of humanity.”7 While admitting that the effects of climate change will be dire, 80,000 Hours says if you choose to work on it you probably won’t be as morally good as someone who works on certain other issues:

“We’d love to see more people working on this issue, but—given our general worldview—all else equal we’d be even more excited for someone to work on one of our top priority problem areas. … Climate change is far less neglected than other issues we prioritise. Current spending is likely over $640 billion per year. Climate change has also received high levels of funding for decades, meaning lots of high-impact work has already occurred. … [F]or all that climate change is a serious problem, there appear to be other risks that pose larger threats to humanity’s long-term thriving.”

Yeah, other people clearly have the climate problem under control. You should consider working on a higher-priority concern. (Note: this is not true in the least, the claims about climate science here are not correct, and we desperately need a larger and more effective climate justice movement.)

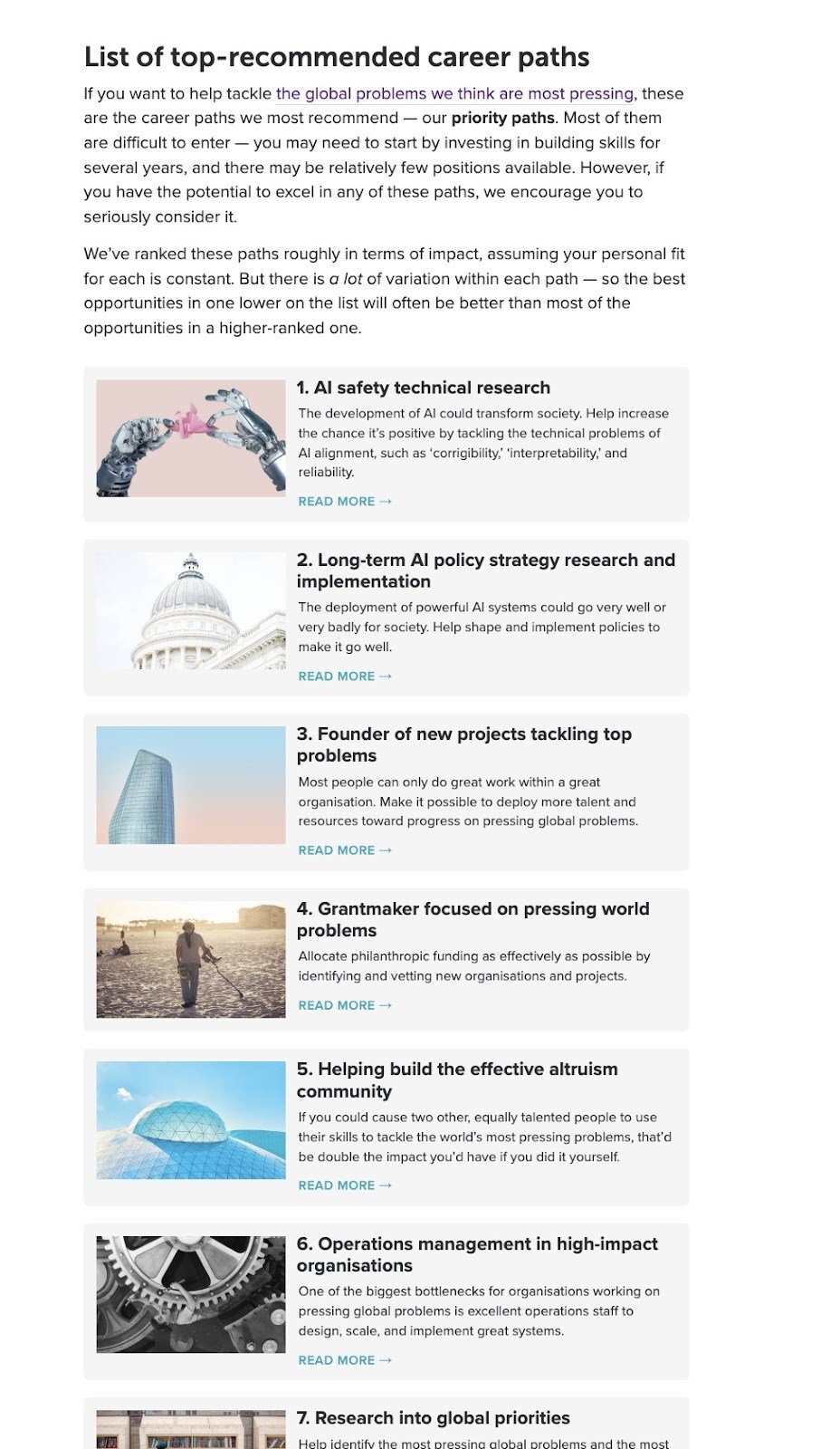

What, then, does EA say is our highest priority, if it’s not the unfolding climate catastrophe? Fortunately 80,000 Hours has produced a list of the “highest priority areas” as determined by scrupulous research. They are:

- Positively shaping the development of artificial intelligence

- Global priorities research

- Building effective altruism

- Reducing global catastrophic biological risk

80,000 Hours does say there are other issues that “we haven’t looked into as much” but “seem like they could be as pressing,” and a “minority” of people should “explore them.” These are “mitigating great power conflict,” “global governance,” “space governance,” and a “list of other issues that could be top priorities.” Beneath this is a list of secondary priorities, where “we’d guess an additional person working on them achieves somewhat less impact than work on our highest priorities, all else equal.” These are “nuclear security,” “improving institutional decision-making,” and “climate change (extreme risks).”

Let’s consider the EA list of priorities. I will leave aside #1 (artificial intelligence) for the moment and return to it shortly. The 2nd priority is researching priorities. Again, this at a time when, to me and many others, our priorities seem pretty fucking clear (stopping war, climate disaster, environmental degradation, and fascism, and giving everyone on Earth nice housing, clean air and water, good food, health, education, etc.). But no, EA tells us that researching priorities is more pressing than, for example, “nuclear security.” So is #3, Building Effective Altruism. Because there aren’t many Effective Altruists, and of course EA is about optimizing the amount of good in the world, “building effective altruism is one of the most promising ways to do good in the world.” This sets up a rather amusing kind of “moral pyramid scheme” (my term, not theirs) in which each person can do good by recruiting other people to recruit other people to do good by recruiting other people. This is considered a more obvious moral priority than stopping warfare between nuclear-armed great powers.

The final highest priority, #4, is one that does make quite a bit of sense. 80,000 Hours talks about the risk of pandemics, and to their great credit, they were warning about pandemics long before the COVID-19 disaster. But even on this useful issue, the utilitarian philosophy of EA introduces a strange twist, which is an emphasis on preventing the kind of pandemic that would render the human race extinct. Because of EA’s utilitarianism (and the various repugnant conclusions it leads to), stopping humans from going extinct is considered much more important than anything else (because if we go extinct, we are preventing many future Units Of Well-Being from coming into existence). They are quite clear on this:

“In this new age, what should be our biggest priority as a civilization? Improving technology? Helping the poor? Changing the political system? Here’s a suggestion that’s not so often discussed: our first priority should be to survive. So long as civilisation continues to exist, we’ll have the chance to solve all our other problems, and have a far better future. But if we go extinct, that’s it.”

Personally, I don’t really give this kind of importance to stopping human extinction. (Let the manatees have the planet, I say!) It’s more important for me to make sure the humans that do exist have good lives than to make sure humanity keeps existing forever.8

But 80,000 Hours’ focus on extinction means their idea of the most critical risk of a pandemic is that there will be zero humans at the end of it. They explain that “pandemics—especially engineered pandemics—pose a significant risk to the existence of humanity” and while “billions of dollars a year are spent on preventing pandemics … little of this is specifically targeted at preventing biological risks that could be existential.” What they’re talking about is a subcategory of risks within “biosecurity and pandemic preparedness” called “global catastrophic biological risks,” which are the ones that might actually wipe out the species altogether. Fortunately, the work that would prevent those extreme “engineered pandemics” does overlap with the work that would deal with pandemics and biological weapons more generally, and 80,000 Hours mentions the need to restrict biological weapons, limit the engineering of dangerous pathogens, and create “broad-spectrum testing, therapeutics, and other technologies and platforms that could be used to quickly test, vaccinate, and treat billions of people in the case of a large-scale, novel outbreak.” All very important and helpful.

But there’s very little here about, say, ensuring that poor people receive the same kind of care as rich people during pandemics. In fact, since the reason for focusing so much on pandemics in particular is to avoid outright extinction, issues of equity simply don’t matter terribly much, except to the extent that they affect extinction risk. Bostrom has said that on the basic utilitarian framework, “priority number one, two, three, and four should … be to reduce existential risk.” (Torres notes that Bostrom is dismissive of “frittering” away resources on “feel-good projects of suboptimal efficacy” like helping the global poor.) Torres points out that not only does this philosophy mean you are “ethically excused from worrying too much about sub-existential threats like non-runaway climate change and global poverty,” but one prominent Effective Altruist has even gone so far as to argue that “saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal.”

So even in the one domain where Effective Altruists (at least those at 80,000 Hours) appear to be advocating a core priority that makes a lot of sense, the appearance is deceptive, because all of it is grounded in the idea that the most important problem with pandemics is that a sufficiently bad one might cause humanity to go extinct. Should we work on establishing universal healthcare? Well, how does it affect the risk that all of humanity will go extinct?

But I have not yet discussed the #1 priority that many Effective Altruists think we need to deal with: artificial intelligence. When it comes to 80,000 Hours’ top career recommendations, working on artificial intelligence “safety” is right at the top of the list.

In recent years, dealing with the “threat” posed by artificial intelligence has become very, very important in EA circles. One Effective Altruist reviewer of MacAskill’s What We Owe The Future says the book understates the degree to which people in the community think artificial intelligence is the most important risk faced by humanity, and readers might feel “bait-and-switched” if they are lured into Effective Altruism by the platitudes about caring about the future and then discover the truth that EA people are very preoccupied with artificial intelligence. Holden Karnofsky, who co-founded the EA charity evaluator GiveWell, says that while he used to work on trying to help the poor, he switched to working on artificial intelligence because of the “stakes”:

“The reason I currently spend so much time planning around speculative future technologies (instead of working on evidence-backed, cost-effective ways of helping low-income people today—which I did for much of my career, and still think is one of the best things to work on) is because I think the stakes are just that high.”

Karnofsky says that artificial intelligence could produce a future “like in the Terminator movies” and that “AI could defeat all of humanity combined.” Thus stopping artificial intelligence from doing this is a very high priority indeed.

Now, I think it tells you quite a lot about Effective Altruism that someone can say in all seriousness “I’ve decided to stop working on evidence-backed poverty relief programs and start working on stopping Skynet from The Terminator, because I think it is the most rational use of my time.” But EA people are dead serious about this. They think that there is a significant risk that computers will rise up and destroy humanity, and that it is urgent to stop them from doing so.

The argument that is usually made is something like: well, if the computation power of machines continues to increase, soon they will be as intelligent as humans, and then they might make themselves more intelligent, to the point where they are super-intelligent, and then they will be able to trick us and outwit us and there will be no way to stop them if we program them badly and they decide to take over the world.

It is hard for me to present this argument fairly, because I think it is so unpersuasive and stupid. Versions of it have been discussed before in this magazine (here by computer scientist Benjamin Charles Germain Lee and here by artificial intelligence engineer Ryan Metz). The case for it is made in detail in Nick Bostrom’s book Superintelligence and Stuart Russell’s Human Compatible: Artificial Intelligence and the Problem of Control. Generally, those making the argument focus less on showing that “superintelligent” computers are possible than on showing that if they existed, they could wreak havoc. The comparison is often made to nuclear weapons: if we were living in the age before nuclear weapons, wouldn’t we want to control their development before they came about? After all, it was only by sheer luck that Nazi Germany didn’t get the atomic bomb first. (Forcing all Jewish scientists to flee or be killed also probably contributed to Nazi Germany’s comparative technological disadvantage.)

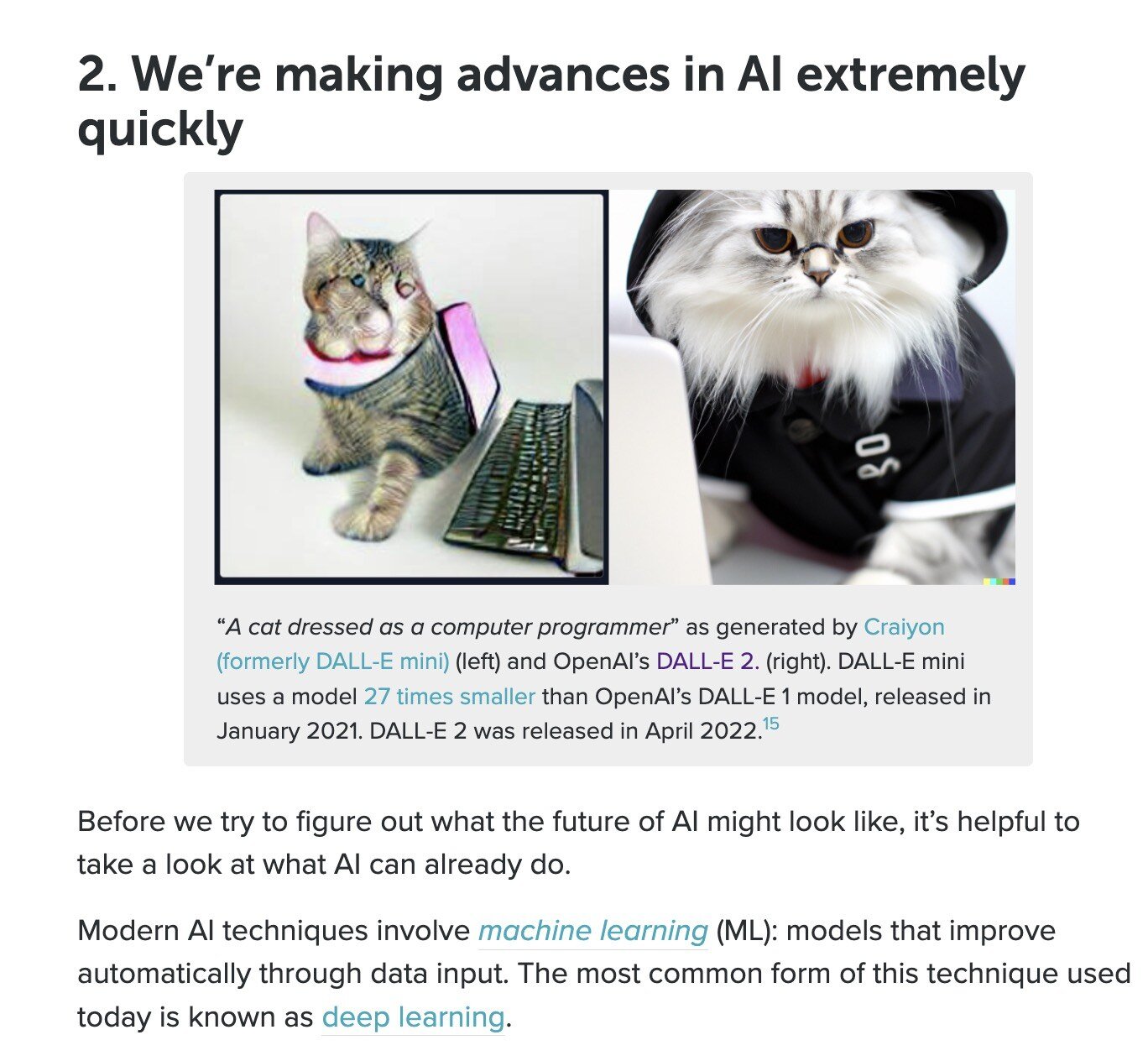

But one reason to be skeptical of the comparison is that in the case of nuclear weapons, while early scientists may have disagreed about whether building them was feasible, those who did think they were feasible could offer an explanation as to how such a bomb could be built. The warnings about dangerous civilization-eating AI do not contain demonstrations that such a technology is possible,9 and rest on unproven assumptions about what algorithms of sufficient complexity would be capable of doing, that are much closer to prophecy or speculative fiction than science.10 80,000 Hours at one point laughably tries to prove that computers might become super-intelligent by showing us that computers are getting much better at generating pictures of cats in hoodies. They warn us that if things continue this way for long, the machines might take over and make humans extinct.

Now, don’t get me wrong: I think so-called “artificial intelligence” (a highly misleading term, incidentally, because a core property of AI is that it’s unintelligent and doesn’t understand what it’s doing) has very scary possibilities. I’ve written before about how the U.S. military is developing utterly insane technologies like “autonomous drone swarms,” giant clouds of flying weaponized robots that can select a target and destroy it. I think the future of some technologies is absolutely terrifying, and that “artificial intelligence” (actually, let’s just say computers) will do things that astonish us within our lifetimes. Computing is getting genuinely impressive. For instance, when I asked an image-generator to produce “Gaudí cars,” it gave me stunning pictures of cars that actually looked like they had been designed by the architect Antoni Gaudí. (I had it make me some Gaudí trains as well.) I’m fascinated by what’s going to happen when music-generating software starts going the way of image-generating software. I suspect we will see within decades programs that can create all new Beatles songs that sound indistinguishable from the actual Beatles (they’re not there yet, but they’re making progress), and you will be able to give the software a series of pieces of music and ask for a new song that is a hybrid of all of them, and get one that sounds extraordinarily good. It’s going to be flabbergasting.

But that doesn’t mean that a computer monster is going to suddenly emerge and eat the world. The fact that pocket calculators can beat humans at math does not mean that if they become really good at math, they might become too smart for their own good and turn on us. If you paint a portrait realistic enough, it doesn’t turn 3-dimensional, develop a mind of its own, and hop out of the frame. But those who think computers pose an “existential risk” quickly start telling stories about all the horrible things that could happen if computers became HAL 9000 or The Terminator’s Skynet. Here’s Karnofsky:

“They could recruit human allies through many different methods—manipulation, deception, blackmail and other threats, genuine promises along the lines of ‘We’re probably going to end up in charge somehow, and we’ll treat you better when we do.’ Human allies could be given valuable intellectual property (developed by AIs), given instructions for making lots of money, and asked to rent their own servers and acquire their own property where an ‘AI headquarters’ can be set up. Since the ‘AI headquarters’ would officially be human property, it could be very hard for authorities to detect and respond to the danger.”

And here’s prominent EA writer Toby Ord, in his book The Precipice: Existential Risk and the Future of Humanity:

“There is good reason to expect a sufficiently intelligent system to resist our attempts to shut it down. This behavior would not be driven by emotions such as fear, resentment, or the urge to survive. Instead, it follows directly from its single-minded preference to maximize its reward: being turned off is a form of incapacitation which would make it harder to achieve high reward, so the system is incentivized to avoid it. In this way, the ultimate goal of maximizing reward will lead highly intelligent systems to acquire an instrumental goal of survival. And this wouldn’t be the only instrumental goal. An intelligent agent would also resist attempts to change its reward function to something more aligned with human values—for it can predict that this would lead it to get less of what it currently sees as rewarding. It would seek to acquire additional resources, computational, physical or human, as these would let it better shape the world to receive higher reward.”

To me, this just reads like (very creative!) dystopian fiction. But you shouldn’t develop your moral priorities by writing spooky stories about conceivable futures. You should look at what is actually happening and what we have good reason to believe is going to happen. Much of the AI scare-stuff uses the trick of made-up meaningless probabilities to try to compensate for the lack of a persuasive theory of how the described technology can actually be developed. (“Okay, so maybe there’s only a small chance. Let’s say 2 percent. But do you want to take that chance?”) From the fact that computers are producing more and more realistic cats, they extrapolate to an eventual computer that can do anything we can do, does not care whether we live or die, and cannot be unplugged. Because this is nightmarish to contemplate, it is easy to assume that we should actually worry about it. But before panicking about monsters under the bed, you should always ask for proof. Stories aren’t enough, even when they’re buttressed with probabilities to make hunches look like hard science.

Worst of all, the focus on artificial intelligence that might kill us all distracts from the real problems that computing will cause as it gets more powerful. Ryan Metz, in his Current Affairs article, discusses how counterfeit images and videos are going to become easier and easier to produce and fakes harder to spot, which could easily worsen our misinformation problem. It will be easier for ordinary people to produce dangerous weapons (although we might also be able to 3D-print some Gaudí cars). Automation will of course disrupt a lot of people’s jobs, and it’s going to be very tough when even artists and designers find themselves being put out of work by art-generating robots. The good news is that if we harness our technologies for good, they may give us lives of ease and pleasure. Which path we go down depends a lot on what kind of socio-political system we end up living under, and it will take a huge struggle to make sure we can achieve very good outcomes rather than awful ones.

Those who fear the “existential risk” of intelligent computers tend to think non-experts like myself are simply unfamiliar with the facts of how things are developing. But I received helpful confirmation of my judgment recently from Timnit Gebru, who has been called “one of the world’s most respected ethical AI researchers.” Gebru served as the co-lead of Google’s ethical AI team, until being forced out of the company after producing a paper raising serious questions about how Google’s artificial intelligence projects could reinforce social injustices. Gebru, a Stanford engineering PhD and co-founder of Black in AI, is deeply familiar with the state of the field, and when I voiced skepticism about EA’s “paranoid fear about a hypothetical superintelligent computer monster” she replied:

“I’m a person having worked on the threats of AI, and actually done something about it. That’s also my profession. And I’m here to tell you that you are correct and what they’re selling is bullshit.”

Gebru is deeply skeptical of EA, even though they ostensibly care about the same thing that she does (social risks posed by artificial intelligence). She points out that EAs “tell privileged people what they want to hear: the way to save humanity is to listen to the privileged and monied rather than those who’ve fought oppression for centuries.” She is scathing in saying that the “bullshit” of “longtermism and effective altruism” is a “religion … that has convinced itself that the best thing to do for ‘all of humanity’ is to throw as much money as possible to the problem of ‘AGI [artificial general intelligence].’” She notes ruefully that EA is hugely popular in Silicon Valley, where hundreds of millions of dollars are raised to allow rich people, mostly white men, to think they’re literally saving the world (by stopping the hypothetical malevolent computer monster).

Gebru points out that there are huge risks to AI, like “being used to make the oil and gas industries more efficient,” and for “criminalizing people, predictive policing, and remotely killing people and making it easier to enter warfare.” But these get us back to our boring old near-term problems—racism, war, climate catastrophe, poverty11—the ones that rank low on the EA priorities list because other people are already working on them and a single brilliant individual will not have much effect on them.12

Gebru’s critique points out the undemocratic and, frankly, racist aspect to a lot of EA thinking.13 EA proponents are not particularly interested in finding out what people who aren’t Oxford philosophy professors think global priorities should be. Peter Singer gave a very awkward answer when it was pointed out to him that all of the thinkers he seemed to respect were white men:

“I have to say, I want to work with people whose ideas are, you know, at a level of discussion that I’m interested in, and that I’m progressing. If you’re thinking of the work of Africans, for example, I don’t know the work of many of them that is really in the same sort of—I’m not quite sure how to put this—participating in the same discussion as the people you’ve just mentioned.”14

In the past, I’ve talked to people who think that while some of Effective Altruism is kooky, at least EAs are sincerely committed to improving the world, and that’s a good thing. But I’m afraid I don’t agree. Good intentions count for very little in my mind. Lots of people who commit evil may have “good intentions”—perhaps Lyndon Johnson really wanted to save the world from Communism in waging the criminal Vietnam war, and perhaps Vladimir Putin really thought he was invading Ukraine to save Russia’s neighbor from Nazis. It doesn’t really matter whether you mean well if you end up doing a bunch of deranged stuff that hurts people, and I can’t praise a movement grounded in a repulsive utilitarian philosophy that has little interest in most of the world’s most pressing near-term concerns and is actively trying to divert bright young idealists who might accomplish some good if they joined authentic grassroots social movements rather than a billionaire-funded cult.15

The truth about doing good is that it doesn’t require much complicated philosophy. What it requires is dedication, a willingness to do things that are unglamorous and tedious. In a movement, you do not get to be a dazzling standout individual who single-handedly changes the world. It’s unsexy. You join with others and add your small drop to the bucket. It’s not a matter of you, but we. There’s already a form of effective altruism, and it’s called socialism. Socialists have been working for a better world for a very long time. Those who want to do “the most good they can” and serve the interests of humanity long-term are welcome to come on board and join the struggle. What are some of the best reasons to be a socialist? For one, the movement isn’t based on morally repugnant arguments that either have to be downplayed or dressed up with evasive language. And secondly, it is inclusive of all people, especially those who have been harmed by capitalism, who want to change the conditions of our society that create, right now, poverty and inequality and species-threatening climate change, to name a few.

Effective Altruism, which reinforces individualism and meritocracy (after all, aren’t those who can do the most good those who have the most to give away?), cannot be redeemed as a philosophy. Nor is it very likely to be successful, since it is constantly having to downplay the true beliefs of its adherents in order to avoid repulsing prospective converts. That is not a recipe for a social movement that can achieve broad popularity. What it may succeed in is getting some people who might otherwise be working on housing justice, criminal punishment reform, climate change, labor organizing, journalism, or education to instead fritter their lives away trying to stop an imaginary future death-robot or facilitate the long-term colonization of space. (Or worse yet, working for a fossil fuel company and justifying it by donating money to stop the death-robot.) It is a shame, because there is plenty of work to be done building a good human future where everyone is cared for. Those who truly want to be both effective and altruistic should ditch EA for good and dive into the hard but rewarding work of growing the global Left.

Of course, this is also a serious moral indictment of all the existing Wall Streeters not donating their income, but this woman apparently had the moral burden of making up for their stinginess. ↩

The highly individualistic focus on the things where you personally make the most difference means that EA discourages people from working on popular causes. 80,000 Hours says: “If lots of people already work on an issue, the best opportunities [!] will have already been taken, which makes it harder to contribute. But that means the most popular issues to work on, like health and education in the US or UK, are probably not where you can do the most good—to have a big impact, you need to find something unconventional.” Yeah, how can you morally justify something low impact like teaching little kids or caring for old people? Teachers, social workers, and nurses are not pursuing what the organization calls “high-impact careers.” ↩

When asked “Should you feed a baby to sharks in order to reduce the probability that a hundred other people will die?” the normal human answer is “Are you crazy?” whereas the utilitarian answers “Well, let’s do the math and see.” Or “shut up and multiply” as one thinker popular among both EA and the neoreactionary right has put it. ↩

The movement’s embrace of hilariously horrible utilitarian conclusions means it’s very easy to imagine an Effective Altruist supervillain. It would also be easy to write an Effective Altruist defense of Stalinism—gotta break those eggs to maximize the long-term moral omelet. I once wrote a kind of short story about an Effective Altruist who becomes a virus researcher after becoming convinced that serving humanity morally necessitates killing most of humanity, and wants to discover a pathogen that will do the trick. But only in order to do the most good he can. Hoel’s article notes that Effective Altruists insist that they do not embrace strict utilitarian ends. For instance, if a billionaire said he would donate enough money to charity to save 1,000 lives, but only if he was allowed to set a homeless man on fire, no moral person could countenance the deal, and I haven’t heard such a thing defended by an EAer. Still, arguments like “Invest In Evil To Do More Good” or “Work At McKinsey To Donate To Malaria Prevention” are not much different. Indeed, an EA who makes their money on planet-destroying cryptocurrency is just setting more people on fire. ↩

I would again note the deep implicit devaluation of care work that runs through 80,000 Hours’ focus on jobs that supposedly maximize your impact rather than simply make you a helpful part of your community. ↩

Émile P. Torres documents the EA obsession with PR and marketing. Amusingly enough, the New Yorker reports that MacAskill once made a moral argument that he should get cosmetic dentistry, citing research showing that people with nice teeth are more effective persuaders. Thus optimizing his effectiveness in serving the cause would justify, nay necessitate, having the gap between his front teeth fixed. ↩

Nick Bostrom has downplayed the harms of climate change, saying: “In absolute terms, [the climate consequences of “business as usual” emissions] would be a huge harm. Yet over the course of the twentieth century, world GDP grew by some 3,700%, and per capita world GDP rose by some 860%. It seems safe to say that … whatever negative economic effects global warming will have, they will be completely swamped by other factors that will influence economic growth rates in this century.” ↩

MacAskill acknowledges in What We Owe The Future that there is a widely-held intuition that we have an obligation to help the humans that do exist but not a moral obligation to create more humans. He rejects this intuition because he argues that it leads to a logical paradox. More on that is discussed in this highly critical review of MacAskill’s book. Quite a lot turns on the issue, because if you are a utilitarian who thinks Maximizing Future Value Units is the way to think about our core moral task, you will end up, like 80,000 Hours, thinking the avoidance of extinction is much more morally pressing than anything else, and will allocate your time accordingly. ↩

Computer scientist Erik J. Larson, in The Myth of Artificial Intelligence, notes that in contrast to nuclear chain reactions, “theories about mind power evolving out of technology aren’t testable,” and so the superintelligence theories, while “presented as scientific inevitability, are more like imagination pumps: just think if this could be! And there’s no doubt, it would be amazing. Perhaps dangerous. But imagining a what-if scenario stops far short of serious discussion about what’s up ahead.” Larson adds in an interview: “[W]hat the futurists were talking about in the media and everywhere else, and I was thinking like, ‘How are we drawing these conclusions? I’m right here doing this work and we have no clue how to build systems that solve the problems that they say are imminent, that are right around the corner. Not only do they seem not around the corner, there’s a reasonable skepticism that we’re ever going to find a solution short of a major conceptual invention that we can’t foresee.’ … So if you have people declaring that humans are soon to be replaced by artificial intelligence that are far superior … It creates a real disincentive for us to do much to fix things in our own society.” [emphasis added] ↩

There is commonly a poorly-defended assumption that sufficiently great computing power will be able to produce “intelligence” because the human brain is just a fleshy version of a computer, so when machines can match the “computation power” of the human brain they will be able to be “intelligent.” 80,000 Hours writes that: “We may be able to get computers to eventually automate anything humans can do. This seems like it has to be possible—at least in principle. This is because it seems that, with enough power and complexity, a computer should be able to simulate the human brain.” The use of “seems” should raise our suspicions. It does not seem that way to me, because I think that would require us to have a far more detailed understanding of how the human brain works. The dubious idea that a brain isn’t doing anything a computer couldn’t in principle do is often defended with bad arguments. Karnofsky, for instance, says that the fact that computers are not made of organic material is irrelevant to whether they can achieve human-like consciousness, which he proves using a “thought experiment”: if we replaced all of the neurons in our brains with “digital neurons” “made out of the same sorts of things today’s computers are made out of instead of what my neurons are made out of,” there would be no difference between the digital-mind and the organic mind. This, as you will immediately see, proves nothing, because it merely assumes the conclusion it seeks to prove, which is that everything done by the neurons of the brain can in fact be replicated by non-living machines. ↩

There are many sound reasons to care about near-term priorities over million-year long-term ones. My own take is that we can let the next generation worry about the long-term, but our generation is the “pivotal generation” that has a particular set of responsibilities to solve a particularly pressing set of problems. If we succeed, then our descendants will be free to worry about that “long term” stuff, which by definition is not especially pressing right now. The belief that all humans in all time periods and places are equally worthy of your personal moral concern is also open to criticism on the grounds that we have particular obligations that come from being embedded in relationships—for instance, as a citizen of the United States, I have a particular duty to try to keep my country from committing hideous atrocities, and as a resident of a neighborhood, I might have obligations to my neighbors. ↩

It’s worth reiterating how individualistic the EA approach to altruism is. 80,000 Hours emphasizes how you can maximize the difference that your presence makes, and thus encourages people not to pursue the projects where together we can collectively make a huge difference, but instead the projects where I, a single person, maximize the marginal difference caused by my decisions. EA helps you feel important. ↩

Torres notes that “not only has the longtermist community been a welcoming home to people who have worried about ‘dysgenic pressures’ being an existential risk, supported the ‘men’s rights’ movement, generated fortunes off Ponzi schemes and made outrageous statements about underpopulation and climate change, but it seems to have made little effort to foster diversity or investigate alternative visions of the future that aren’t Baconian, pro-capitalist fever-dreams built on the privileged perspectives of white men in the Global North.” ↩

Singer said in the same interview that his new “Journal of Controversial Ideas” was preparing to publish an article drawing “parallels between young-Earth creationism and the ideological views that reject well-established facts in genetics, particularly related to differences in cognitive abilities” and an article on “whether blackface can be acceptable.” ↩

Incidentally, I’ve actually been rather surprised during my six years of running a magazine that no EA person has ever seemed to show any curiosity about the value of building independent media institutions, which perform a vital public service and produce huge “bang for the buck” in terms of their social good. We’re in a terrible time for journalism and media—it obviously needs funding and is a vital part of a healthy democracy—yet it’s nowhere on their priorities list. I have my tongue only partly in my cheek when I say that donating to Current Affairs is an extremely effective form of altruism and that if EA people took their stated principles seriously they would be writing large checks to this magazine right now. I am open to being proven wrong about their sincerity, however, so I invite them to prove that they do in fact believe in their stated principles by writing said checks. ↩