The Dangerous Ideas of “Longtermism” and “Existential Risk”

So-called rationalists have created a disturbing secular religion that looks like it addresses humanity’s deepest problems, but actually justifies pursuing the social preferences of elites.

In a late-2020 interview with CNBC, Skype cofounder Jaan Tallinn made a perplexing statement. “Climate change,” he said, “is not going to be an existential risk unless there’s a runaway scenario.” A “runaway scenario” would occur if crossing one or more critical thresholds in the climate system causes Earth’s thermostat to rise uncontrollably. The hotter it has become, the hotter it will become, via self-amplifying processes. This is probably what happened a few billion years ago on our planetary neighbor Venus, a hellish cauldron whose average surface temperature is high enough to melt lead and zinc.

Fortunately, the best science today suggests that a runaway scenario is unlikely, although not impossible. Yet even without a runaway scenario, the best science also frighteningly affirms that climate change will have devastating consequences. It will precipitate lethal heatwaves, megadroughts, catastrophic wildfires (like those seen recently in the Western U.S.), desertification, sea-level rise, mass migrations, widespread political instability, food-supply disruptions/famines, extreme weather events (more dangerous hurricanes and flash floods), infectious disease outbreaks, biodiversity loss, mass extinctions, ecological collapse, socioeconomic upheaval, terrorism and wars, etc. To quote an ominous 2020 paper co-signed by more than 11,000 scientists from around the world, “planet Earth is facing a climate emergency” that, unless immediate and drastic action is taken, will bring about “untold suffering.”

So why does Tallinn think that climate change isn’t an existential risk? Intuitively, if anything should count as an existential risk it’s climate change, right?

Cynical readers might suspect that, given Tallinn’s immense fortune of an estimated $900 million, this might be just another case of a super-wealthy tech guy dismissing or minimizing threats that probably won’t directly harm him personally. Despite being disproportionately responsible for the climate catastrophe, the super-rich will be the least affected by it. Peter Thiel—the libertarian who voted for a climate-denier in 2016—has his “apocalypse retreat” in New Zealand, Richard Branson owns his own hurricane-proof island, Jeff Bezos bought some 400,000 acres in Texas, and Elon Musk wants to move to Mars. Astoundingly, Reid Hoffman, the multi-billionaire who cofounded LinkedIn, reports that “more than 50 percent of Silicon Valley’s billionaires have bought some level of ‘apocalypse insurance,’ such as an underground bunker.”

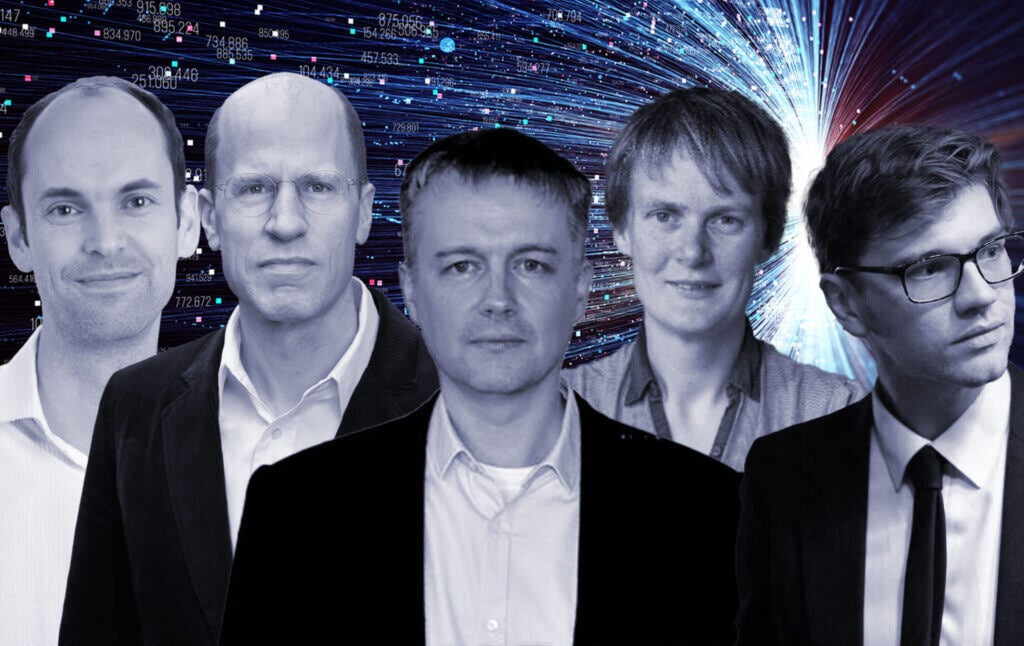

That’s one possibility, for sure. But I think there’s a deeper reason for Tallinn’s comments. It concerns an increasingly influential moral worldview called longtermism. This has roots in the work of philosopher Nick Bostrom, who coined the term “existential risk” in 2002 and, three years later, founded the Future of Humanity Institute (FHI) based at the University of Oxford, which has received large sums of money from both Tallinn and Musk. Over the past decade, “longtermism” has become one of the main ideas promoted by the “Effective Altruism” (EA) movement, which generated controversy in the past for encouraging young people to work for Wall Street and petrochemical companies in order to donate part of their income to charity, an idea called “earn to give.” According to the longtermist Benjamin Todd, formerly at Oxford University, “longtermism might well turn out to be one of the most important discoveries of effective altruism so far.”

Longtermism should not be confused with “long-term thinking.” It goes way beyond the observation that our society is dangerously myopic, and that we should care about future generations no less than present ones. At the heart of this worldview, as delineated by Bostrom, is the idea that what matters most is for “Earth-originating intelligent life” to fulfill its potential in the cosmos. What exactly is “our potential”? As I have noted elsewhere, it involves subjugating nature, maximizing economic productivity, replacing humanity with a superior “posthuman” species, colonizing the universe, and ultimately creating an unfathomably huge population of conscious beings living what Bostrom describes as “rich and happy lives” inside high-resolution computer simulations.

This is what “our potential” consists of, and it constitutes the ultimate aim toward which humanity as a whole, and each of us as individuals, are morally obligated to strive. An existential risk, then, is any event that would destroy this “vast and glorious” potential, as Toby Ord, a philosopher at the Future of Humanity Institute, writes in his 2020 book The Precipice, which draws heavily from earlier work in outlining the longtermist paradigm. (Note that Noam Chomsky just published a book also titled The Precipice.)

The point is that when one takes the cosmic view, it becomes clear that our civilization could persist for an incredibly long time and there could come to be an unfathomably large number of people in the future. Longtermists thus reason that the far future could contain way more value than exists today, or has existed so far in human history, which stretches back some 300,000 years. So, imagine a situation in which you could either lift 1 billion present people out of extreme poverty or benefit 0.00000000001 percent of the 10^23 biological humans who Bostrom calculates could exist if we were to colonize our cosmic neighborhood, the Virgo Supercluster. Which option should you pick? For longtermists, the answer is obvious: you should pick the latter. Why? Well, just crunch the numbers: 0.00000000001 percent of 10^23 people is 10 billion people, which is ten times greater than 1 billion people. This means that if you want to do the most good, you should focus on these far-future people rather than on helping those in extreme poverty today. As the FHI longtermists Hilary Greaves and Will MacAskill (the latter of whom is said to have cofounded the Effective Altruism movement with Toby Ord) write, “for the purposes of evaluating actions, we can in the first instance often simply ignore all the effects contained in the first 100 (or even 1,000) years, focussing primarily on the further-future effects. Short-run effects act as little more than tie-breakers.”
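For readers who want to check the "crunch the numbers" step themselves, here is a minimal sketch of that arithmetic. It assumes Bostrom's figure is read as ten to the twenty-third power and uses exact fractions to avoid rounding; the variable names are mine, chosen only for illustration.

```python
from fractions import Fraction

# Option A: lift 1 billion present-day people out of extreme poverty.
present_people_helped = 10**9

# Option B: benefit 0.00000000001 percent of the potential future population.
potential_future_people = 10**23        # Bostrom's estimate, as quoted above
share_percent = Fraction(1, 10**11)     # 0.00000000001 percent, written exactly

# Convert the percentage to a fraction of the population (divide by 100).
future_people_helped = potential_future_people * share_percent / 100

# How many times larger is option B than option A, counted in people?
ratio = future_people_helped / present_people_helped

print(future_people_helped)  # 10000000000, i.e. 10 billion
print(ratio)                 # 10
```

The sketch only reproduces the head count. Whether a head count of hypothetical future people is the right way to choose between the two options is exactly what is in dispute.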

This brings us back to climate change, which is expected to cause serious harms over precisely this time period: the next few decades and centuries. If what matters most is the very far future (thousands, millions, billions, and trillions of years from now), then climate change isn’t going to be high up on the list of global priorities unless there’s a runaway scenario. Sure, it will cause “untold suffering,” but think about the situation from the point of view of the universe itself. Whatever traumas and miseries, deaths and destruction, happen this century will pale in comparison to the astronomical amounts of “value” that could exist once humanity has colonized the universe, become posthuman, and created upwards of 10^58 (Bostrom’s later estimate) conscious beings in computer simulations. Bostrom makes this point in terms of economic growth, which he and other longtermists see as integral to fulfilling “our potential” in the universe:

“In absolute terms, [non-runaway climate change] would be a huge harm. Yet over the course of the twentieth century, world GDP grew by some 3,700%, and per capita world GDP rose by some 860%. It seems safe to say that … whatever negative economic effects global warming will have, they will be completely swamped by other factors that will influence economic growth rates in this century.”

In the same paper, Bostrom declares that even “a non-existential disaster causing the breakdown of global civilization is, from the perspective of humanity as a whole, a potentially recoverable setback,” describing this as “a giant massacre for man, a small misstep for mankind.” That’s of course cold comfort for those in the crosshairs of climate change—the residents of the Maldives who will lose their homeland, the South Asians facing lethal heat waves above the 95-degree F wet-bulb threshold of survivability, and the 18 million people in Bangladesh who may be displaced by 2050. But, once again, when these losses are juxtaposed with the apparent immensity of our longterm “potential,” this suffering will hardly be a footnote to a footnote within humanity’s epic biography.

These aren’t the only incendiary remarks from Bostrom, the Father of Longtermism. In a paper that founded one half of the longtermist research program, he characterizes the most devastating disasters throughout human history, such as the two World Wars (including the Holocaust), Black Death, 1918 Spanish flu pandemic, major earthquakes, large volcanic eruptions, and so on, as “mere ripples” when viewed from “the perspective of humankind as a whole.” As he writes:

“Tragic as such events are to the people immediately affected, in the big picture of things … even the worst of these catastrophes are mere ripples on the surface of the great sea of life.”

In other words, 40 million civilian deaths during WWII were awful, we can all agree about that. But think about this in terms of the 10^58 simulated people who could someday exist in computer simulations if we colonize space. It would require trillions and trillions and trillions of WWIIs one after another to even approach the loss of these unborn people if an existential catastrophe were to happen. This is the case even on the lower estimates of how many future people there could be. Take Greaves and MacAskill’s figure of 10^18 expected biological and digital beings on Earth alone (meaning that we don’t colonize space). That’s still a way bigger number than 40 million, analogous to a single grain of sand next to Mount Everest.

It’s this line of reasoning that leads Bostrom, Greaves, MacAskill, and others to argue that even the tiniest reductions in “existential risk” are morally equivalent to saving the lives of literally billions of living, breathing, actual people. For example, Bostrom writes that if there is “a mere 1 percent chance” that 10^54 conscious beings (most living in computer simulations) come to exist in the future, then “we find that the expected value of reducing existential risk by a mere one billionth of one billionth of one percentage point is worth a hundred billion times as much as a billion human lives.” Greaves and MacAskill echo this idea in a 2021 paper by arguing that “even if there are ‘only’ 10^14 lives to come … , a reduction in near-term risk of extinction by one millionth of one percentage point would be equivalent in value to a million lives saved.”
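The Greaves and MacAskill figure can be reproduced with a simple expected-value calculation. The sketch below is my own illustration rather than anything from their paper: it assumes the quoted population is ten to the fourteenth power, and it treats a drop in extinction risk as "saving" that fraction of all future lives, which is the assumption the whole argument rests on.

```python
from fractions import Fraction

# Greaves and MacAskill's "only" figure for lives still to come.
future_lives = 10**14

# "One millionth of one percentage point", expressed as a probability.
one_percentage_point = Fraction(1, 100)
risk_reduction = Fraction(1, 10**6) * one_percentage_point

# Expected lives "saved" = population at stake x reduction in extinction risk.
expected_lives_saved = future_lives * risk_reduction

print(risk_reduction)        # 1/100000000
print(expected_lives_saved)  # 1000000, i.e. one million
```

The output matches the million lives in the quotation. The multiplication itself is trivial. What does the work is the premise that a probability-weighted unborn person counts the same as a living one.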

To make this concrete, imagine Greaves and MacAskill in front of two buttons. If pushed, the first would save the lives of 1 million living, breathing, actual people. The second would increase the probability that 10^14 currently unborn people come into existence in the far future by a teeny-tiny amount. Because, on their longtermist view, there is no fundamental moral difference between saving actual people and bringing new people into existence, these options are morally equivalent. In other words, they’d have to flip a coin to decide which button to push. (Would you? I certainly hope not.) In Bostrom’s example, the morally right thing is obviously to sacrifice billions of living human beings for the sake of even tinier reductions in existential risk, assuming a minuscule 1 percent chance of a larger future population: 10^54 people.

All of this is to say that even if billions of people were to perish in the coming climate catastrophe, so long as humanity survives with enough of civilization intact to fulfill its supposed “potential,” we shouldn’t be too concerned. In the grand scheme of things, non-runaway climate change will prove to be nothing more than a “mere ripple” —a “small misstep for mankind,” however terrible a “massacre for man” it might otherwise be.

Even worse, since our resources for reducing existential risk are finite, Bostrom argues that we must not “fritter [them] away” on what he describes as “feel-good projects of suboptimal efficacy.” Such projects would include, on this account, not just saving people in the Global South—those most vulnerable, especially women—from the calamities of climate change, but all other non-existential philanthropic causes, too. As the Princeton philosopher Peter Singer writes about Bostrom in his 2015 book on Effective Altruism, “to refer to donating to help the global poor … as a ‘feel-good project’ on which resources are ‘frittered away’ is harsh language.” But it makes perfectly good sense within Bostrom’s longtermist framework, according to which “priority number one, two, three, and four should … be to reduce existential risk.” Everything else is smaller fish not worth frying.

If this sounds appalling, it’s because it is appalling. By reducing morality to an abstract numbers game, and by declaring that what’s most important is fulfilling “our potential” by becoming simulated posthumans among the stars, longtermists not only trivialize past atrocities like WWII (and the Holocaust) but give themselves a “moral excuse” to dismiss or minimize comparable atrocities in the future. This is one reason that I’ve come to see longtermism as an immensely dangerous ideology. It is, indeed, akin to a secular religion built around the worship of “future value,” complete with its own “secularised doctrine of salvation,” as the Future of Humanity Institute historian Thomas Moynihan approvingly writes in his book X-Risk. The popularity of this religion among wealthy people in the West (especially the socioeconomic elite) makes sense because it tells them exactly what they want to hear: not only are you ethically excused from worrying too much about sub-existential threats like non-runaway climate change and global poverty, but you are actually a morally better person for focusing instead on more important things, namely risks that could permanently destroy “our potential” as a species of Earth-originating intelligent life.

To drive home the point, consider an argument from the longtermist Nick Beckstead, who has overseen tens of millions of dollars in funding for the Future of Humanity Institute. Since shaping the far future “over the coming millions, billions, and trillions of years” is of “overwhelming importance,” he claims, we should actually care more about people in rich countries than poor countries. This comes from a 2013 PhD dissertation that Ord describes as “one of the best texts on existential risk,” and it’s cited on numerous Effective Altruist websites, including some hosted by the Centre for Effective Altruism, which shares office space in Oxford with the Future of Humanity Institute. The passage is worth quoting in full:

“Saving lives in poor countries may have significantly smaller ripple effects than saving and improving lives in rich countries. Why? Richer countries have substantially more innovation, and their workers are much more economically productive. By ordinary standards—at least by ordinary enlightened humanitarian standards—saving and improving lives in rich countries is about equally as important as saving and improving lives in poor countries, provided lives are improved by roughly comparable amounts. But it now seems more plausible to me that saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal.”

Never mind the fact that many countries in the Global South are relatively poor precisely because of the long and sordid histories of Western colonialism, imperialism, exploitation, political meddling, pollution, and so on. What hangs in the balance is astronomical amounts of “value.” What shouldn’t we do to achieve this magnificent end? Why not prioritize lives in rich countries over those in poor countries, even if gross historical injustices remain inadequately addressed? Beckstead isn’t the only longtermist who’s explicitly endorsed this view, either. As Hilary Greaves states in a 2020 interview with Theron Pummer, who co-edited the book Effective Altruism with her, if one’s “aim is doing the most good, improving the world by the most that I can,” then although “there’s a clear place for transferring resources from the affluent Western world to the global poor … longtermist thought suggests that something else may be better still.”

Returning to climate change once again, we can see how Tallinn got the idea that our environmental impact probably isn’t existentially risky from academic longtermists like Bostrom. As alluded to above, Bostrom regards non-runaway (which he calls “moderate”) global warming, as well as “threats to the biodiversity of Earth’s ecosphere,” as “endurable” rather than “terminal” for humanity. Similarly, Ord claims in The Precipice that climate change poses a mere 1-in-1,000 chance of existential catastrophe, in contrast to a far greater 1-in-10 chance of catastrophe involving superintelligent machines (dubbed the “Robopocalypse” by some). Although, like Bostrom, Ord acknowledges that the climate crisis could get very bad, he assures us that “the typical scenarios of climate change would not destroy our potential.”

Within the billionaire world, these conclusions have been parroted by some of the most powerful men on the planet today (not just Tallinn). For example, Musk, an admirer of Bostrom’s who donated $10 million in 2015 to the Future of Life Institute, another longtermist organization that Tallinn cofounded, said in an interview this year that his “concern with the CO2 is not kind of where we are today or even … the current rate of carbon generation.” Rather, the worry is that “if carbon generation keeps accelerating and … if we’re complacent then I think … there’s some risk of sort of non-linear climate change”—meaning, one surmises, a runaway scenario. Peter Thiel has also apparently held this view for some time, which is unsurprising given his history with longtermist thinking and the Effective Altruism movement. (He gave the keynote address at the 2013 Effective Altruism Summit.) But Thiel also declared in 2014: “People are spending way too much time thinking about climate change” and “way too little thinking about AI.”

The reference to AI, or “artificial intelligence,” here is important. Not only do many longtermists believe that superintelligent machines pose the greatest single hazard to human survival, but they seem convinced that if humanity were to create a “friendly” superintelligence whose goals are properly “aligned” with our “human goals,” then a new Utopian age of unprecedented security and flourishing would suddenly commence. This eschatological vision is sometimes associated with the “Singularity,” made famous by futurists like Ray Kurzweil, which critics have facetiously dubbed the “techno-rapture” or “rapture of the nerds” because of its obvious similarities to the Christian dispensationalist notion of the Rapture, when Jesus will swoop down to gather every believer on Earth and carry them back to heaven. As Bostrom writes in his Musk-endorsed book Superintelligence, not only would the various existential risks posed by nature, such as asteroid impacts and supervolcanic eruptions, “be virtually eliminated,” but a friendly superintelligence “would also eliminate or reduce many anthropogenic risks” like climate change. “One might believe,” he writes elsewhere, that “the new civilization would [thus] have vastly improved survival prospects since it would be guided by superintelligent foresight and planning.”

Tallinn makes the same point during a Future of Life Institute podcast recorded this year. Whereas a runaway climate scenario is at best many decades away, if it could happen at all, Tallinn speculates that superintelligence will present “an existential risk in the next 10 or 50 years.” Thus, he says, “if you’re going to really get AI right [by making it ‘friendly’], it seems like all the other risks [that we might face] become much more manageable.” This is about as literal an interpretation of “deus ex machina” as one can get, and in my experience as someone who spent several months as a visiting scholar at the Centre for the Study of Existential Risk, which was cofounded by Tallinn, it’s a widely-held view among longtermists. In fact, Greaves and MacAskill estimate that every $100 spent on creating a “friendly” superintelligence would be morally equivalent to “saving one trillion [actual human] lives,” assuming that an additional 10^24 people could come to exist in the far future. Hence, they point out that focusing on superintelligence gets you a way bigger bang for your buck than, say, preventing people who exist right now from contracting malaria by distributing mosquito nets.

What I find most unsettling about the longtermist ideology isn’t just that it contains all the ingredients necessary for a genocidal catastrophe in the name of realizing astronomical amounts of far-future “value.” Nor is it that this religious ideology has already infiltrated the consciousness of powerful actors who could, for example, “save 41 [million] people at risk of starvation” but instead use their wealth to fly themselves to space. Even more chilling is that many people in the community believe that their mission to “protect” and “preserve” humanity’s “longterm potential” is so important that they have little tolerance for dissenters. These include critics who might suggest that longtermism is dangerous, or that it supports what Frances Lee Ansley calls white supremacy (given the implication, outlined and defended by Beckstead, that we should prioritize the lives of people in rich countries). When one believes that existential risk is the most important concept ever invented, as someone at the Future of Humanity Institute once told me, and that failing to realize “our potential” would not merely be wrong but a moral catastrophe of literally cosmic proportions, one will naturally be inclined to react strongly against those who criticize this sacred dogma. When you believe the stakes are that high, you may be quite willing to use extraordinary means to stop anyone who stands in your way.

In fact, numerous people have come forward, both publicly and privately, over the past few years with stories of being intimidated, silenced, or “canceled.” (Yes, “cancel culture” is a real problem here.) I personally have had three colleagues back out of collaborations with me after I self-published a short critique of longtermism, not because they wanted to, but because they were pressured to do so by longtermists in the community. Others have expressed worries about the personal repercussions of openly criticizing Effective Altruism or the longtermist ideology. For example, the moral philosopher Simon Knutsson wrote a critique several years ago in which he notes, among other things, that Bostrom appears to have repeatedly misrepresented his academic achievements in claiming that, as he wrote on his website in 2006, “my performance as an undergraduate set a national record in Sweden.” (There is no evidence that this is true.) The point is that, after publishing this critique, Knutsson reports that he became “concerned about his safety” given past efforts to censure certain ideas by longtermists with clout in the community.

This might sound hyperbolic, but it’s consistent with a pattern of questionable behavior from leaders in the Effective Altruism movement more generally. For example, one of the first people to become an Effective Altruist after the movement was born circa 2009, Simon Jenkins, reports an incident in which he criticized an idea within Effective Altruism on a Facebook group run by the community. Within an hour, not only had his post been deleted but someone who works for the Centre for Effective Altruism actually called his personal phone to instruct him not to question the movement. “We can’t have people posting anything that suggests that Giving What We Can [an organization founded by Ord] is bad,” as Jenkins recalls. These are just a few of several dozen stories that people have shared with me after I went public with some of my own unnerving experiences.

All of this is to say that I’m not especially optimistic about convincing longtermists that their obsession with our “vast and glorious” potential (quoting Ord again) could have profoundly harmful consequences if it were to guide actual policy in the world. As the Swedish scholar Olle Häggström has disquietingly noted, if political leaders were to take seriously the claim that saving billions of living, breathing, actual people today is morally equivalent to negligible reductions in existential risk, who knows what atrocities this might excuse? If the ends justify the means, and the “end” in this case is a veritable techno-Utopian playground full of 10^58 simulated posthumans awash in “the pulsing ecstasy of love,” as Bostrom writes in his grandiloquent “Letter from Utopia,” would any means be off-limits? While some longtermists have recently suggested that there should be constraints on which actions we can take for the far future, others like Bostrom have literally argued that preemptive violence and even a global surveillance system should remain options for ensuring the realization of “our potential.” It’s not difficult to see how this way of thinking could have genocidally catastrophic consequences if political actors were to “[take] Bostrom’s argument to heart,” in Häggström’s words.

I should emphasize that rejecting longtermism does not mean that one must reject long-term thinking. You ought to care equally about people no matter when they exist, whether today, next year, or a couple billion years hence. If we shouldn’t discriminate against people based on their spatial distance from us, we shouldn’t discriminate against them based on their temporal distance, either. Many of the problems we face today, such as climate change, will have devastating consequences for future generations hundreds or thousands of years in the future. That should matter. We should be willing to make sacrifices for their wellbeing, just as we make sacrifices for those alive today by donating to charities that fight global poverty. But this does not mean that one must genuflect before the altar of “future value” or “our potential,” understood in techno-Utopian terms of colonizing space, becoming posthuman, subjugating the natural world, maximizing economic productivity, and creating massive computer simulations stuffed with 10^45 digital beings (on Greaves and MacAskill’s estimate if we were to colonize the Milky Way).

Care about the long term, I like to say, but don’t be a longtermist. Superintelligent machines aren’t going to save us, and climate change really should be one of our top global priorities, whether or not it prevents us from becoming simulated posthumans in cosmic computers.

Although a handful of longtermists have recently written that the Effective Altruism movement should take climate change more seriously, among the main reasons given for doing so is that, to quote an employee at the Centre for Effective Altruism, “by failing to show a sufficient appreciation of the severity of climate change, EA may risk losing credibility and alienating potential effective altruists.” In other words, community members should talk more about climate change not because of moral considerations relating to climate justice, the harms it will cause to poor people, and so on, but for marketing reasons. It would be “bad for business” if the public were to associate a dismissive attitude about climate change with Effective Altruism and its longtermist offshoot. As the same author reiterates later on, “I agree [with Bostrom, Ord, etc.] that it is much more important to work on x-risk … , but I wonder whether we are alienating potential EAs by not grappling with this issue.”

Yet even if longtermists were to come around to “caring” about climate change, this wouldn’t mean much if it were for the wrong reasons. Knutsson says:

“Like politicians, one cannot simply and naively assume that these people are being honest about their views, wishes, and what they would do. In the Effective Altruism and existential risk areas, some people seem super-strategic and willing to say whatever will achieve their goals, regardless of whether they believe the claims they make—even more so than in my experience of party politics.”

Either way, the damage may already have been done, given that averting “untold suffering” from climate change will require immediate action from the Global North. Meanwhile, millionaires and billionaires under the influence of longtermist thinking are focused instead on superintelligent machines that they believe will magically solve the mess that, in large part, they themselves have created.