We’ve all heard it a thousand times—robots are coming for your job. You may think you’re safe, but you’re not. Sooner or later, the robots will show up at your workplace and coldly order you to clear out your desk. Are you a paralegal? A Palo Alto company called Nextlaw Labs has developed an AI that can read documents and conduct legal research. Are you a Wall Street portfolio manager? A Cambridge-MIT startup called Kensho has written an algorithm that’s much better at picking stocks than you are. Are you a fast food worker? A San Francisco company called Momentum Machines has invented a mechanized burger-flipper, which makes gourmet burgers at “affordable prices” that would be “impossible without culinary automation.” All in all, Oxford University researchers have estimated that 47% of U.S. jobs, including “transportation and logistics occupations, together with the bulk of office and administrative support workers, and labour in production occupations,” are at high risk of automation in the near future.

All right, fine, you think—I hated that job anyway. Let the robots have it! If my toaster oven wishes to draft legal briefs and manage stock portfolios in addition to its other work, I see no reason why I shouldn’t permit it.

But soon the follow-up question dawns: how am I going to feed my family if I’m being outcompeted by robots in every field? As it is, the odds are rather stacked against us. If it’s quicker to program a robot than to train a human, and if it’s cheaper to maintain a robot than to pay a human, and if robots are even slightly more productive than humans, then the free market will ensure that most work is increasingly done by robots. The few individuals who own the robots will only need to share the profits of their enterprise with an increasingly small skeleton crew of employees. The rest of the population, meanwhile, will continue to depend on the goods and services produced by the robots, so they’ll have very little bargaining-power on their side. Income inequality will balloon exponentially. All capital will gradually be absorbed by the robot-masters, and will be diverted to their weird pet projects, most of which revolve around trying to ensure that rich people will live forever, despite the fact that the mortality of the rich is one of the few consolations the rest of us have.

The robots are coming, ready to remake our world according to the specifications of a cabal of millennial asswipes and megalomaniacal billionaires. These are the people who are destined to become our feudal overlords in the Age of Robotics. The question is: is there any way to stop them?

There are a few ways to approach the problem of a robot economy. One is to reject increased automation of labor as generally undesirable, because people need jobs and robots are tricky devils. The 19th century had an unsuccessful but memorable tradition of anti-machine fervor, from the Luddite machine-breaking riots of the 1810s, to this magnificently sinister pronouncement by Samuel Butler in 1863:

“Our opinion is that war to the death should be instantly proclaimed against [machines]. Every machine of every sort should be destroyed by the well-wisher of his species. Let there be no exceptions made, no quarter shown; let us at once go back to the primeval condition of the race. If it be urged that this is impossible under the present condition of human affairs, this at once proves that the mischief is already done, that our servitude has commenced in good earnest, that we have raised a race of beings whom it is beyond our power to destroy, and that we are not only enslaved but are absolutely acquiescent in our bondage.”

Some people, of course, might regard this whole “war to the death against anything more complicated than an abacus” proposal as extreme. But full-on Unabomber-style technophobia aside, there’s an argument to be made that limits on automation ought to be established by regulation, in order to allow human beings to continue to work. This is not only because U.S. society, as currently constituted, makes livelihood contingent on employment, but also because work—so the argument goes—is generally a good thing for human beings. In his 2015 encyclical Laudato Si’, for example, Pope Francis wrote that we shouldn’t endeavor to have “technological progress increasingly replace human work,” as this outcome would, in his view, “be detrimental to humanity. Work is a necessity, part of the meaning of life on this earth, a path to growth, human development and personal fulfillment.” This line of thought is also fairly common within labor movements, because it holds that all occupations are equal in dignity, that a construction worker is no less deserving of respect, and entitled to a decent living, than a software engineer. Even if you could automate all labor, you wouldn’t want to, because human identity and psychological well-being are inextricably bound up in the work we do, and mechanization deprives people of the ability to contribute to their communities. The important thing is simply to make sure that workers are properly paid and protected.

On the other end of the spectrum, we have a competing theory that the more work can be done by machines, the better—all you need is some means of ensuring that most human beings are able to enjoy the benefits of machine labor, and then they’ll be free to dispose of their time however they please, which is better than forcing people to have “jobs.” Take, for example, Oscar Wilde’s 1891 essay The Soul of Man Under Socialism, which advocated vigorously for increased automation—partly for moral reasons, and partly because Wilde thought manual labor was kind of gross. “To sweep a slushy crossing for eight hours,” he wrote, “is a disgusting occupation. To sweep it with mental, moral, or physical dignity seems to me to be impossible. To sweep it with joy would be appalling. Man is made for something better than disturbing dirt. All work of that kind should be done by a machine.” In Wilde’s view, humanity ought instead to spend its time “amusing itself, or enjoying cultivated leisure… or making beautiful things, or reading beautiful things, or simply contemplating the world with admiration and delight.”

If one agrees with this framing of the problem, then our main goal is not to prevent automation, but to somehow separate work from livelihood, or at the very least from subsistence. One can imagine a world in which the Silicon Valleyites build useful robots, and the rest of us collect a Universal Basic Income from the government, allowing us to purchase the robot-created goods and services. This Universal Basic Income would presumably be derived from taxes; tax policy would thus determine whether the UBI was a robust redistributive mechanism or, say, a disproportionate subsidization of the poor by the somewhat less poor. Either way, most people wouldn’t have to work for their daily bread, and, in that respect, would have greater autonomy over their day-to-day pursuits than in a human labor economy.

Both of these arguments, broadly sketched, make morally and psychologically compelling claims. On the pro-automation side, more machines mean that fewer humans will be condemned to devote their lives to soul-crushing and life-threatening occupations. There seems to me to be no morally admissible counterargument against this, especially given that these kinds of jobs fall almost exclusively to the most vulnerable and disempowered members of society. On the other hand, the idea that there should be, effectively, no truly contributive societal role for anybody who isn’t, say, a scientist or an artist, seems deeply problematic. (And I think anybody who believes that all human beings will miraculously transform into scientists and artists as soon as they are Properly Educated is (a) probably wrong, and (b) greatly underestimating how fucking irritating that fictitious society would be to live in.) Work is integral to many people’s sense of fulfillment and purpose, and a lot of us prefer to have that work structured, at least loosely, in the mode of a “job.” Some might argue that paeans to the virtuousness of work are the result of corporate brainwashing by The Man, or are some kind of socially-entrained delusion arising from our nation’s vestigial Protestantism. But I believe that this pro-work sentiment has much deeper roots. It is fundamentally about the wish for a particular vocation, which speaks to a simultaneous desire to take pleasure in one’s own accomplishments, and for those accomplishments to contribute to some larger and more important good than individual satisfaction.

You can, of course, easily disagree with the pro-work argument, or believe that most people will still “work” meaningfully in a fully-automated society—they just won’t have “jobs” whose terms are dictated by third parties. But even then, we will still have to contend with the additional problem that lack of direct human involvement in the nuts and bolts of society generates serious disparities in the distribution of political power. If an automated society can run itself, without input from most humans, then most humans are not going to have any impact whatsoever on decision-making processes—and they have little enough as it is. In a human labor economy, ordinary people could at least refuse to work, and thus imperil their bosses’ operations. In a robot economy, ordinary people will not even have this option.

Our ultimate goal, then, is to establish a mixed system where most people are doing meaningful work, of a type they like doing; where work has social value, but no one will be ostracized or suffer serious deprivation if they choose to stop working; where all the work that’s necessary to ensure the material sustenance of the population is continually being accomplished by some means; and where those means, insofar as they are automated, are not controlled by the corporate descendants (and/or the reanimated cryo-cadavers) of Peter Thiel, Elon Musk, and their ilk. Seems doable!

As we think about what increased automation ought to look like, in an ideal world where the economy serves human needs, we should first disabuse ourselves of the idea that efficiency is an appropriate metric for assessing the value of an automated system. Sure, efficiency can be a good thing, and no sane person wants an inefficient ambulance, but it isn’t a good in itself. (After all, an efficient self-disemboweling machine doesn’t thereby become a “good” self-disemboweling machine.) In innumerable contexts, inefficient systems and unpredictable forces give our lives character, variety, and suspense. The ten-minute delay on your morning train, properly considered, is a surprise gift from the universe, being an irreproachable excuse to read an extra chapter of a new novel. Standing in line at the pharmacy is an opportunity to have a short conversation with a stranger, who may be going through a hard time, or who may have something interesting to tell you. The unqualified worship of efficiency is a pernicious kind of idolatry. Often, the real problem isn’t that our world isn’t efficient enough, but rather that we lack patience, humility, curiosity, and compassion: these are not failings in our external environments, but in ourselves.

Additionally, we can be sure that, left to themselves, Market Forces will prioritize efficiencies that generate profit over efficiencies that really generate maximum good for humans. We currently see, for instance, a constant proliferation of labor-saving devices and services that are mostly purchased by fairly well-off people. This isn’t to say that these products are always completely worthless: to the extent that a Roomba reduces an overworked single parent’s unpaid labor around the home, for example, that might be quite a good thing. But in other respects, these minute improvements in efficiency (or perceived efficiency) generate diminishing returns for human well-being, and usually benefit only a very small percentage of the population, to boot. The amount of energy that’s put into building apps and appliances to replace existing things that already work reasonably well is surely a huge waste of ingenuity, in a world filled with pressing social problems that need many more hands on deck.

Thinking about which kinds of jobs and systems really ought to be automated, however, can require complicated and nuanced assessments. Two possible baseline standards, for example, would be that robots should only do jobs at which they are as good as or better than humans, and/or that robots should only do jobs that are difficult, dangerous, or unfulfilling for humans. In some cases, these standards would be fairly straightforward to apply. A robot will likely never be able to write a novel to the same standard as a human writer, for example, so it doesn’t make sense to try to replace novelists with louchely-attired cyborgs. On the other hand, robots could quite conceivably be designed to bake cakes, compose generic pop songs, and create inscrutable canvases for major contemporary art museums, but to the extent that humans enjoy being bakers, pop stars, and con artists, we shouldn’t automate those jobs, either.

For certain professions, however, estimating the relative advantages of human labor versus robot labor is rather difficult by either of these metrics. For example, some commentators have predicted we’ll see a marked increase in robot “caregivers” for the elderly. In many ways, this would be a wonderful development. For elders who have health and mobility impairments, but are otherwise mentally acute, having robots that can help you out of bed, steady you in the shower, and chauffeur you to your destinations might mean several more decades of independent living. Robots could make it much easier for people to care for their aging loved ones in their own homes, rather than putting them in some kind of facility. And within institutional settings like nursing homes, hospitals, and hospices, it would be an excellent thing to have robots that can do hygienic tasks, like cleaning bedpans, or physically dangerous ones, like lifting heavy patients (nurses have a very high rate of back injury).

At the same time, the idea of fully automated elder care has troubling implications. There’s no denying that caring for declining elders can be difficult and often unpleasant work; anybody who has spent time in medical facilities knows that nursing staffs are overworked, and that individual nurses can be incompetent and profoundly unsympathetic. One might well argue that a caregiver robot, while not perfect, is still better than an exhausted or outright hostile human caregiver; and thus, that the pros of substituting robots for humans across a wide array of caregiving tasks outweigh the cons. However, as a society that already marginalizes and warehouses the elderly—especially the elderly poor—we ought to feel queasy about consigning them to an existence where the little human interaction that remains to them is increasingly replaced by purpose-built machines. Aging can be a time of terrible loneliness and isolation: imagine the misery of a life where no fellow-human ever again touches you with affection, or even basic friendliness. The problem is not just that caring for elders is often difficult, but that the humans who currently do it are undervalued and underpaid, despite the fact that they bear the immense burden of buttressing our shaky social conscience. It would be a lot easier to manufacture caregiver robots than to improve working conditions for human caregivers, but a robot can’t possibly substitute for a nurse who is actually kind, empathetic, and good at their job.

These sorts of concerns are common to most of the “caring” and educational professions, which are usually labor-intensive, time-consuming, poorly compensated, and insufficiently respected. Automating these jobs is an easy way to avoid the harder work of meaningfully improving them. For example, people like Netflix CEO Reed Hastings think that “education software” is a reasonable alternative to a human-run classroom, despite the fact that the only real purpose of primary education, when it comes down to it, is to teach children about social interaction. (Do you remember anything substantive that you learned in school before, say, age 15? I sure don’t.) And what job is more difficult than parenting? If a robot caregiver is cheaper than a nanny and more reliable than a babysitter, we’d be foolish to suppose that indifferent, career-focused, or otherwise overtasked parents won’t readily choose to have robots mind their children for long periods of time. Automation may well be more cost-effective and easier to implement than better conditions for working parents, or government payouts that would allow people to be full-time parents to their small children, or healthier social attitudes generally about work-family balance. But with teaching and parenting, as with nursing, it’s intuitively obvious that software and robots are in no sense truly equivalent to humans. Rather than automating these jobs, we should be thinking about how to materially improve conditions for people who work in them, with the aim of making their work easier where possible, and rewarding them appropriately for the aspects of their work that are irremediably difficult. Supplementing some aspects of human labor with automated labor can be part of this endeavor, but it can’t be the whole solution.

Additionally, without better labor standards for human workers, determining which kinds of jobs humans actually don’t want to do becomes rather tricky. Some jobs are perhaps miserable by their very nature, but others are miserable because the people who currently perform them lack benefits and protections. We may all have assumptions and biases here that are not necessarily instructive. We might often, like Oscar Wilde, think primarily of manual labor when we’re imagining which jobs should be automated. Very likely there are some forms of manual labor so monotonous, painful, or unpleasant that nobody on earth would voluntarily choose to do them—we can certainly think of a few jobs, like mining, that are categorically and unconscionably dangerous for human workers. But it is also an undeniable fact that many people genuinely like physical labor. It’s even possible that many more people like physical labor than are fully aware of it, due to the class-related prejudices associated with manual work—why on earth else do so many people who work white-collar jobs derive their entire sense of self-worth from running marathons or lifting weights or riding stationary bicycles in sweaty, rubber-scented rooms? Why do so many retirees take up gardening? Why do some lunatics regularly clean their houses as a form of relaxation? Clearly, physical labor can be very satisfying under the right circumstances. The point is, there are some jobs we might intuitively think are morally imperative to automate, because in their current forms, they are undeniably awful. But if we had more humane labor laws, and altered our societal expectations about the appropriate relationship between work and leisure, they might be jobs people actually liked. We know that being a fruit-picker, for example, is an awful profession when you’re working grueling hours, threatened with deportation, and exposed to dangerous pesticides—but it could conceivably be enjoyable work under better conditions.

Of course, in areas such as agriculture, there might be a separate imperative towards automation, if more efficient food production, distribution, and disposal had important implications for overall human health and well-being. Theoretically, if humans were simply not as good as automatons at these tasks, or if there weren’t enough willing humans to do the job to a high standard, then automation might be morally justified. But there would still be value in having human workers built into these systems: we could imagine some kind of centralized food distribution system that is largely automated, but where willing human farmers build local food production capacity, as a bulwark against corruption or disaster in the centralized system. (It seems, in any case, like a real societal risk not to have enough people with practical subsistence-level skills in the population, as systems are always subject to failure.) In an ideal world, then, we wouldn’t have robots replace humans in any job that can be turned into a job that a human actually wants to do, unless this increased efficiency would have quite substantial benefits for the general population, or for some vulnerable subpopulation. In this instance, however, the benefits of this increased efficiency would also have to be weighed against the inherent danger of an excess concentration of power in the owners of the automated systems. If it isn’t possible for such a centralized system to be administered as a public utility, by accountable officials, automation might well not be worth the risk, and ought to be resisted.

We thus return continually to the problem of political power, which is a hard one: in real life, after all, automation is very unlikely to be implemented in accordance with abstract moral principles, and there’s no clear path towards public ownership of important automated industries. Given this, our only means of controlling automation will likely be to establish restrictions on it, while continuing to advocate for robust, ambitious labor reforms. Automation-specific policy goals might include things like human worker quotas (in industries where this would be sensible and humane), outright bans on certain kinds of automation (such as in caring professions), and some form of universal basic income or equivalent social welfare scheme. Another conceivable tactic would be to impose a heavy tax on automation, calculated based on how many human workers would have been needed to perform equivalent work. This tax revenue could then be used to fund other jobs, in particular the kinds of community-based and public interest jobs that are desperately needed, but that so-called “job creators” have never found it much worth their while to create.

It’s important not to allow ourselves to be beguiled by Silicon Valley’s purportedly humanitarian pledge to manufacture a world in which we never again have to sit in traffic, or take out our own garbage, or feel pain, or be bored, or get sick, or die. We can argue about whether such a world is desirable, but it’s fairly obvious that it’s not achievable anytime in the near future, and that, to the extent that it is achievable, it will be achievable chiefly by those who are already rich. And honestly, what good will it do them anyhow? Silicon Valley, after all, is populated by people who already have every imaginable material convenience at their fingertips: they inhabit a world that’s pretty damn close to the one they’re promising to build for us, and yet they still aren’t satisfied. Mark Zuckerberg, whose net worth is $50 billion, wrote a letter to his newborn daughter in which he stated that “advancing human potential is about pushing the boundaries on how great a human life can be” and expressed his hope that his child could “learn and experience 100 times more than we do today” and “have access to every idea, person, and opportunity.” This, when you stop to think about it for a second, is demented. What in God’s name would it even feel like to experience “100 times more” than today’s humans? Mightn’t that actually turn out to be awful? Why do we have to have access to every idea in the universe? Hasn’t existing social media wrecked people’s nerves badly enough as it is? In any case, merely ensuring that every human has access to the most elementary building-blocks of happiness (food on the table, a roof over their head, work they enjoy, and people who love them) has already proved an insurmountable task for our species to date. Let’s not get ahead of ourselves.

Meanwhile, other Silicon Valleyites’ darker preoccupations with things like immortality serums, “uploading” their consciousnesses to the cloud, or creating island principalities on which to conduct experiments in governance, suggest that for these people (who are ultimately as frail, frightened, and discontented as the rest of us, for all their wealth and intelligence) human life as it currently exists is merely the raw material of something altogether better and longer and more intense that they imagine could be. It’s hard to say which is worse, the kind of Silicon Valleyites with no social conscience, who create start-ups purely for accolades and cash, or the kind of Silicon Valleyites who want to use the entire planet as fodder for their personal spiritual journeys. I realize that I am a prematurely elderly curmudgeon, but I don’t trust these people, and neither should you. There are aspects of an increasingly automated future that are deeply appealing. But if we can’t have it on our terms, we had better turn it down.

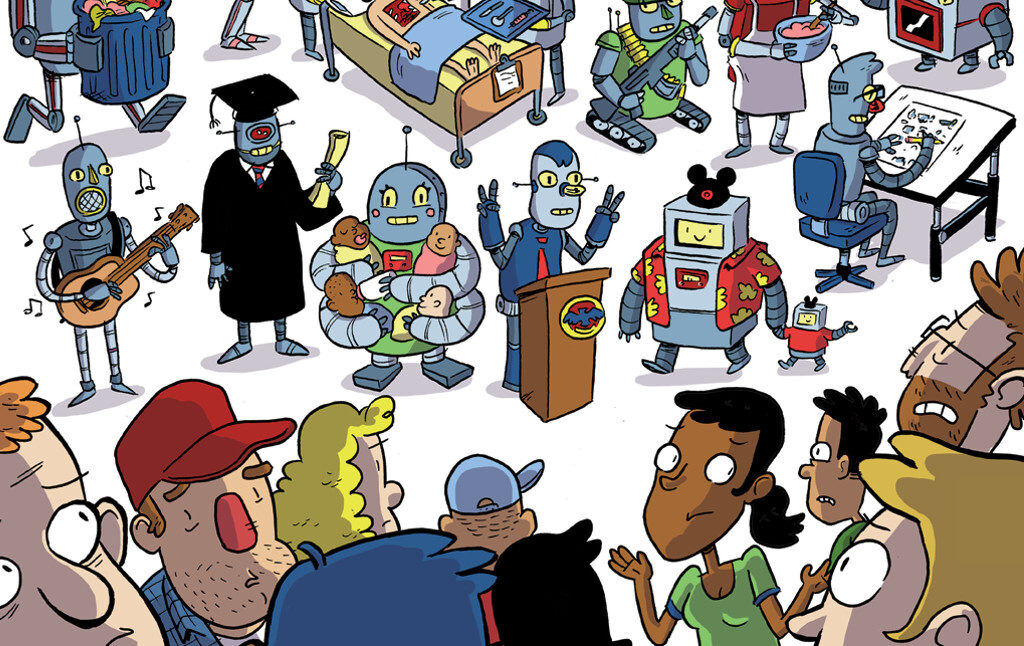

Robot illustrations by Pranas Naujokaitis. This article appears as part of our new Silicon Valley Spectacular.